I would suggest storing the DateTime in the table as UTC(which is called Coordinated Universal Time). This time zone is a Standard Time Zone. To avoid timezone complexity in our application.

Herewith, I shared the step-by-step conversion of UTC Date to given Time Zone name date in the SQL Server.

Step 1

Create Time zone Table,

CREATE TABLE [Timezone](

[Id] [int] NOT NULL PRIMARY KEY,

[Identifier] [varchar](100) NULL,

[StandardName] [varchar](100) NULL,

[DisplayName] [varchar](100) NULL,

[DaylightName] [varchar](100) NULL,

[SupportsDaylightSavingTime] [bit] NULL,

[BaseUtcOffsetSec] [int] NULL

)

Step 2 - Insert Time Zone Name

Insert the below given query with 138 timezone with names,

insert into Timezone values(1, NULL,'UTC-11','(UTC-11:00) Coordinated Universal Time-11',NULL,0,-39600)

insert into Timezone values(2, NULL,'Aleutian Standard Time','(UTC-10:00) Aleutian Islands',NULL,0,-36000)

insert into Timezone values(3, NULL,'Hawaiian Standard Time','(UTC-10:00) Hawaii',NULL,0,-36000)

insert into Timezone values(4, NULL,'Marquesas Standard Time','(UTC-09:30) Marquesas Islands',NULL,0,-34200)

insert into Timezone values(5, NULL,'Alaskan Standard Time','(UTC-09:00) Alaska',NULL,0,-32400)

insert into Timezone values(6, NULL,'UTC-09','(UTC-09:00) Coordinated Universal Time-09',NULL,0,-32400)

insert into Timezone values(7, NULL,'Pacific Standard Time (Mexico)','(UTC-08:00) Baja California',NULL,0,-28800)

insert into Timezone values(8, NULL,'UTC-08','(UTC-08:00) Coordinated Universal Time-08',NULL,0,-28800)

insert into Timezone values(9, NULL,'Pacific Standard Time','(UTC-08:00) Pacific Time (US & Canada)',NULL,0,-28800)

insert into Timezone values(10,NULL,'US Mountain Standard Time','(UTC-07:00) Arizona',NULL,0,-25200)

insert into Timezone values(11,NULL,'Mountain Standard Time (Mexico)','(UTC-07:00) Chihuahua, La Paz, Mazatlan',NULL,0,-25200)

insert into Timezone values(12,NULL,'Mountain Standard Time','(UTC-07:00) Mountain Time (US & Canada)',NULL,0,-25200)

insert into Timezone values(13,NULL,'Central America Standard Time','(UTC-06:00) Central America',NULL,0,-21600)

insert into Timezone values(14,NULL,'Central Standard Time','(UTC-06:00) Central Time (US & Canada)',NULL,0,-21600 )

insert into Timezone values(15,NULL,'Easter Island Standard Time','(UTC-06:00) Easter Island',NULL,0,-21600 )

insert into Timezone values(16,NULL,'Central Standard Time (Mexico)','(UTC-06:00) Guadalajara, Mexico City, Monterrey',NULL,0,-21600 )

insert into Timezone values(17,NULL,'Canada Central Standard Time','(UTC-06:00) Saskatchewan',NULL,0,-21600 )

insert into Timezone values(18,NULL,'SA Pacific Standard Time','(UTC-05:00) Bogota, Lima, Quito, Rio Branco',NULL,0,-18000 )

insert into Timezone values(19,NULL,'Eastern Standard Time (Mexico)','(UTC-05:00) Chetumal',NULL,0,-18000)

insert into Timezone values(20,NULL,'Eastern Standard Time','(UTC-05:00) Eastern Time (US & Canada)',NULL,0,-18000 )

insert into Timezone values(21,NULL,'Haiti Standard Time','(UTC-05:00) Haiti', NULL,0,-18000)

insert into Timezone values(22,NULL,'Cuba Standard Time','(UTC-05:00) Havana',NULL,0,-18000)

insert into Timezone values(23,NULL,'US Eastern Standard Time','(UTC-05:00) Indiana (East)',NULL,0,-18000 )

insert into Timezone values(24,NULL,'Turks And Caicos Standard Time','(UTC-05:00) Turks and Caicos',NULL,0,-18000 )

insert into Timezone values(25,NULL,'Paraguay Standard Time','(UTC-04:00) Asuncion',NULL,0,-14400)

insert into Timezone values(26,NULL,'Atlantic Standard Time','(UTC-04:00) Atlantic Time (Canada)',NULL,0,-14400 )

insert into Timezone values(27,NULL,'Venezuela Standard Time','(UTC-04:00) Caracas',NULL,0,-14400)

insert into Timezone values(28,NULL,'Central Brazilian Standard Time','(UTC-04:00) Cuiaba',NULL,0,-14400)

insert into Timezone values(29,NULL,'SA Western Standard Time' ,'(UTC-04:00) Georgetown, La Paz, Manaus, San Juan',NULL,0,-14400 )

insert into Timezone values(30,NULL,'Pacific SA Standard Time','(UTC-04:00) Santiago',NULL,0,-14400)

insert into Timezone values(31,NULL,'Newfoundland Standard Time','(UTC-03:30) Newfoundland',NULL,0,-12600)

insert into Timezone values(32,NULL,'Tocantins Standard Time','(UTC-03:00) Araguaina', NULL,0,-10800)

insert into Timezone values(33,NULL,'E. South America Standard Time','(UTC-03:00) Brasilia',NULL,0,-10800)

insert into Timezone values(34,NULL,'SA Eastern Standard Time' ,'(UTC-03:00) Cayenne, Fortaleza',NULL,0,-10800 )

insert into Timezone values(35,NULL,'Argentina Standard Time','(UTC-03:00) City of Buenos Aires',NULL,0,-10800 )

insert into Timezone values(36,NULL,'Greenland Standard Time','(UTC-03:00) Greenland',NULL,0,-10800)

insert into Timezone values(37,NULL,'Montevideo Standard Time','(UTC-03:00) Montevideo',NULL,0,-10800 )

insert into Timezone values(38,NULL,'Magallanes Standard Time','(UTC-03:00) Punta Arenas',NULL,0,-10800 )

insert into Timezone values(39,NULL,'Saint Pierre Standard Time','(UTC-03:00) Saint Pierre and Miquelon',NULL,0,-10800 )

insert into Timezone values(40,NULL,'Bahia Standard Time','(UTC-03:00) Salvador',NULL,0,-10800 )

insert into Timezone values(41,NULL,'UTC-02','(UTC-02:00) Coordinated Universal Time-02',NULL,0,-7200 )

insert into Timezone values(42,NULL,'Mid-Atlantic Standard Time','(UTC-02:00) Mid-Atlantic - Old',NULL,0,-7200 )

insert into Timezone values(43,NULL,'Azores Standard Time','(UTC-01:00) Azores',NULL,0,-3600)

insert into Timezone values(44,NULL,'Cape Verde Standard Time','(UTC-01:00) Cabo Verde Is.',NULL,0,-3600)

insert into Timezone values(45,NULL,'UTC','(UTC) Coordinated Universal Time',NULL,0,0)

insert into Timezone values(46,NULL,'GMT Standard Time','(UTC+00:00) Dublin, Edinburgh, Lisbon, London',NuLL,0,0)

insert into Timezone values(47,NULL,'Greenwich Standard Time','(UTC+00:00) Monrovia, Reykjavik ',NULL,0,0)

insert into Timezone values(48,NULL,'Sao Tome Standard Time','(UTC+00:00) Sao Tome',NULL,0,0)

insert into Timezone values(49,NULL,'W. Europe Standard Time','(UTC+01:00) Amsterdam, Berlin, Bern, Rome, Stockholm, Vienna',NULL,0,+3600)

insert into Timezone values(50,NULL,'Central Europe Standard Time','(UTC+01:00) Belgrade, Bratislava, Budapest, Ljubljana, Prague',NULL,0,+3600)

insert into Timezone values(51,NULL,'Romance Standard Time','(UTC+01:00) Brussels, Copenhagen, Madrid, Paris',NULL,0,+3600)

insert into Timezone values(52,NULL,'Morocco Standard Time','(UTC+01:00) Casablanca',NULL, 0,+3600)

insert into Timezone values(53,NULL,'Central European Standard Time','(UTC+01:00) Sarajevo, Skopje, Warsaw, Zagreb',NULL,0,+3600)

insert into Timezone values(54,NULL,'W. Central Africa Standard Time','(UTC+01:00) West Central Africa',NULL,0,+3600)

insert into Timezone values(55,NULL,'Jordan Standard Time','(UTC+02:00) Amman',NULL,0,+7200)

insert into Timezone values(56,NULL,'GTB Standard Time','(UTC+02:00) Athens, Bucharest',NULL,0,+7200)

insert into Timezone values(57,NULL,'Middle East Standard Time','(UTC+02:00) Beirut',NULL,0,+7200)

insert into Timezone values(58,NULL,'Egypt Standard Time','(UTC+02:00) Cairo',NULL,0,+7200)

insert into Timezone values(59,NULL,'E. Europe Standard Time','(UTC+02:00) Chisinau',NULL,0,+7200)

insert into Timezone values(60,NULL,'Syria Standard Time','(UTC+02:00) Damascus',NULL,0,+7200)

insert into Timezone values(61,NULL,'West Bank Standard Time','(UTC+02:00) Gaza, Hebron',NULL,0,+7200)

insert into Timezone values(62,NULL,'South Africa Standard Time','(UTC+02:00) Harare, Pretoria',NULL,0,+7200)

insert into Timezone values(63,NULL,'FLE Standard Time','(UTC+02:00) Helsinki, Kyiv, Riga, Sofia, Tallinn, Vilnius',NULL,0,+7200)

insert into Timezone values(64,NULL,'Israel Standard Time','(UTC+02:00) Jerusalem',NULL,0,+7200)

insert into Timezone values(65,NULL,'Kaliningrad Standard Time','(UTC+02:00) Kaliningrad',NULL,0,+7200)

insert into Timezone values(66,NULL,'Sudan Standard Time','(UTC+02:00) Khartoum',NULL,0,+7200)

insert into Timezone values(67,NULL,'Libya Standard Time','(UTC+02:00) Tripoli',NULL,0,+7200)

insert into Timezone values(68,NULL,'Namibia Standard Time','(UTC+02:00) Windhoek',NULL,0,+7200)

insert into Timezone values(69,NULL,'Arabic Standard Time','(UTC+03:00) Baghdad',NULL,0,+10800)

insert into Timezone values(70,NULL,'Turkey Standard Time' ,'(UTC+03:00) Istanbul',NULL,0,+10800)

insert into Timezone values(71,NULL,'Arab Standard Time','(UTC+03:00) Kuwait, Riyadh',NULL,0,+10800)

insert into Timezone values(72,NULL,'Belarus Standard Time','(UTC+03:00) Minsk',NULL,0,+10800)

insert into Timezone values(73,NULL,'Russian Standard Time','(UTC+03:00) Moscow, St. Petersburg',NULL,0,+10800)

insert into Timezone values(74,NULL,'E. Africa Standard Time','(UTC+03:00) Nairobi',NULL,0,+10800)

insert into Timezone values(75,NULL,'Iran Standard Time','(UTC+03:30) Tehran',NULL,0,+12600 )

insert into Timezone values(76,NULL,'Arabian Standard Time','(UTC+04:00) Abu Dhabi, Muscat',NULL,0,+14400)

insert into Timezone values(77,NULL,'Astrakhan Standard Time','(UTC+04:00) Astrakhan, Ulyanovsk',NULL,0,+14400)

insert into Timezone values(78,NULL,'Azerbaijan Standard Time','(UTC+04:00) Baku',NULL,0,+14400)

insert into Timezone values(79,NULL,'Russia Time Zone 3','(UTC+04:00) Izhevsk, Samara',NULL,0,+14400)

insert into Timezone values(80,NULL,'Mauritius Standard Time','(UTC+04:00) Port Louis',NULL,0,+14400)

insert into Timezone values(81,NULL,'Saratov Standard Time','(UTC+04:00) Saratov',NULL,0,+14400)

insert into Timezone values(82,NULL,'Georgian Standard Time','(UTC+04:00) Tbilisi',NULL,0,+14400)

insert into Timezone values(83,NULL,'Volgograd Standard Time','(UTC+04:00) Volgograd',NULL,0,+14400)

insert into Timezone values(84,NULL,'Caucasus Standard Time','(UTC+04:00) Yerevan',NULL,0,+14400)

insert into Timezone values(85,NULL,'Afghanistan Standard Time','(UTC+04:30) Kabul',NULL,0,+16200)

insert into Timezone values(86,NULL,'West Asia Standard Time','(UTC+05:00) Ashgabat, Tashkent',NULL,0,+18000)

insert into Timezone values(87,NULL,'Ekaterinburg Standard Time','(UTC+05:00) Ekaterinburg',NULL,0,+18000)

insert into Timezone values(88,NULL,'Pakistan Standard Time','(UTC+05:00) Islamabad, Karachi',NULL,0,+18000)

insert into Timezone values(89,NULL,'Qyzylorda Standard Time','(UTC+05:00) Qyzylorda',NULL,0,+18000)

insert into Timezone values(90,NULL,'India Standard Time','(UTC+05:30) Chennai, Kolkata, Mumbai, New Delhi',NULL,0,+19800)

insert into Timezone values(91,NULL,'Sri Lanka Standard Time','(UTC+05:30) Sri Jayawardenepur',NULL,0,+19800)

insert into Timezone values(92,NULL,'Nepal Standard Time','(UTC+05:45) Kathmandu',NULL,0,+20700)

insert into Timezone values(93,NULL,'Central Asia Standard Time','(UTC+06:00) Astana',NULL,0,+21600)

insert into Timezone values(94,NULL,'Bangladesh Standard Time','(UTC+06:00) Dhaka',NULL,0,+21600)

insert into Timezone values(95,NULL,'Omsk Standard Time','(UTC+06:00) Omsk',NULL,0,+21600)

insert into Timezone values(96,NULL,'Myanmar Standard Time','(UTC+06:30) Yangon (Rangoon)',NULL,0,+23400)

insert into Timezone values(97,NULL,'SE Asia Standard Time','(UTC+07:00) Bangkok, Hanoi, Jakarta',NULL,0,+25200)

insert into Timezone values(98,NULL,'Altai Standard Time','(UTC+07:00) Barnaul, Gorno-Altaysk',NULL,0,+25200)

insert into Timezone values(99,NULL,'W. Mongolia Standard Time','(UTC+07:00) Hovd',NULL,0,+25200)

insert into Timezone values(100,NULL,'North Asia Standard Time','(UTC+07:00) Krasnoyarsk',NULL,0,+25200)

insert into Timezone values(101,NULL,'N. Central Asia Standard Time','(UTC+07:00) Novosibirsk',NULL,0,+25200)

insert into Timezone values(102,NULL,'Tomsk Standard Time','(UTC+07:00) Tomsk',NULL,0,+25200)

insert into Timezone values(103,NULL,'China Standard Time','(UTC+08:00) Beijing, Chongqing, Hong Kong, Urumqi',NULL,0,+28800)

insert into Timezone values(104,NULL,'North Asia East Standard Time','(UTC+08:00) Irkutsk',NULL,0,+28800)

insert into Timezone values(105,NULL,'Singapore Standard Time','(UTC+08:00) Kuala Lumpur, Singapore',NULL,0,+28800 )

insert into Timezone values(106,NULL,'W. Australia Standard Time','(UTC+08:00) Perth',NULL,0,+28800)

insert into Timezone values(107,NULL,'Taipei Standard Time','(UTC+08:00) Taipei',NULL,0,+28800)

insert into Timezone values(108,NULL,'Ulaanbaatar Standard Time','(UTC+08:00) Ulaanbaatar',NULL,0,+28800)

insert into Timezone values(109,NULL,'Aus Central W. Standard Time','(UTC+08:45) Eucla',NULL,0,+31500)

insert into Timezone values(110,NULL,'Transbaikal Standard Time','(UTC+09:00) Chita',NULL,0,+32400 )

insert into Timezone values(111,NULL,'Tokyo Standard Time','(UTC+09:00) Osaka, Sapporo, Tokyo',NULL,0,32400)

insert into Timezone values(112,NULL,'North Korea Standard Time','(UTC+09:00) Pyongyang',NULL,0,+32400)

insert into Timezone values(113,NULL,'Korea Standard Time','(UTC+09:00) Seoul',NULL,0,+32400)

insert into Timezone values(114,NULL,'Yakutsk Standard Time','(UTC+09:00) Yakutsk',NULL,0,+32400)

insert into Timezone values(115,NULL,'Cen. Australia Standard Time','(UTC+09:30) Adelaide',NULL,0,+34200)

insert into Timezone values(116,NULL,'AUS Central Standard Time','(UTC+09:30) Darwin',NULL,0,+34200)

insert into Timezone values(117,NULL,'E. Australia Standard Time','(UTC+10:00) Brisbane',NULL,0,+36000)

insert into Timezone values(118,NULL,'AUS Eastern Standard Time','(UTC+10:00) Canberra, Melbourne, Sydney',NULL,0,+36000)

insert into Timezone values(119,NULL,'West Pacific Standard Time','(UTC+10:00) Guam, Port Moresby',NULL,0,+36000)

insert into Timezone values(120,NULL,'Tasmania Standard Time','(UTC+10:00) Hobart',NULL,0,+36000)

insert into Timezone values(121,NULL,'Vladivostok Standard Time','(UTC+10:00) Vladivostok',NULL,0,+36000)

insert into Timezone values(122,NULL,'Lord Howe Standard Time','(UTC+10:30) Lord Howe Island',NULL,0,+37800)

insert into Timezone values(123,NULL,'Bougainville Standard Time','(UTC+11:00) Bougainville Island',NULL,0,+39600)

insert into Timezone values(124,NULL,'Russia Time Zone 10','(UTC+11:00) Chokurdakh',NULL,0,+39600)

insert into Timezone values(125,NULL,'Magadan Standard Time','(UTC+11:00) Magadan',NULL,0,+39600)

insert into Timezone values(126,NULL,'Norfolk Standard Time','(UTC+11:00) Norfolk Island',NULL,0,+39600)

insert into Timezone values(127,NULL,'Sakhalin Standard Time','(UTC+11:00) Sakhalin',NULL,0,+39600)

insert into Timezone values(128,NULL,'Central Pacific Standard Time','(UTC+11:00) Solomon Is., New Caledonia',NULL,0,+39600)

insert into Timezone values(129,NULL,'Russia Time Zone 11','(UTC+12:00) Anadyr, Petropavlovsk-Kamchatsky',NULL,0,+43200)

insert into Timezone values(130,NULL,'New Zealand Standard Time','(UTC+12:00) Auckland, Wellington',NULL,0,+43200)

insert into Timezone values(131,NULL,'UTC+12','(UTC+12:00) Coordinated Universal Time+12',NULL,0,+43200)

insert into Timezone values(132,NULL,'Fiji Standard Time','(UTC+12:00) Fiji',NULL,0,+43200)

insert into Timezone values(133,NULL,'Kamchatka Standard Time','(UTC+12:00) Petropavlovsk-Kamchatsky - Old',NULL,0,+43200)

insert into Timezone values(134,NULL,'Chatham Islands Standard Time','(UTC+12:45) Chatham Islands',NULL,0,+45900)

insert into Timezone values(135,NULL,'UTC+13','(UTC+13:00) Coordinated Universal Time+13',NULL,0,+46800)

insert into Timezone values(136,NULL,'Tonga Standard Time','(UTC+13:00) Nuku alofa',NULL,0,+46800)

insert into Timezone values(137,NULL,'Samoa Standard Time','(UTC+13:00) Samoa',NULL,0,+46800)

insert into Timezone values(138,NULL,'Line Islands Standard Time','(UTC+14:00) Kiritimati Island',NULL,0,+50400)

Step 3 - Create a function for Convert UTC timezone to any given timezone

Pass the UTC DateTime and timezone in the function,

CREATE FUNCTION [dbo].[ConvertUTCtoLocal]

(

-- Add the parameters for the function here

@utcDateTime DATETIME,

@strTimeZoneName varchar(100)

)

RETURNS Datetime

AS

BEGIN

-- Declare the return variable here

--the original date

DECLARE @m_createddate as Datetime ,@BaseUtcOffsetSec AS INT

SELECT @BaseUtcOffsetSec =BaseUtcOffsetSec FROM Timezone WHERE StandardName=@strTimeZoneName

-- Return the result of the function

select @m_createddate=DATEADD(SECOND, @BaseUtcOffsetSec,@utcDateTime)

RETURN @m_createddate

END

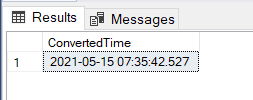

Step 4

Result using User Defined Function of SQL,

SELECT [dbo].[ConvertUTCtoLocal] (GETUTCDATE(),'Central Standard Time')

HostForLIFEASP.NET SQL Server 2019 Hosting