November 29, 2023 07:07 by

Peter

The ability to handle and evaluate time-sensitive information is critical in today's data-driven environment. This is especially true in industries such as finance, IoT, and healthcare, which generate massive amounts of time-stamped data every day. Traditional relational databases frequently struggle to store and retrieve such data efficiently, which has led to the development of specialized systems known as Time Series Databases (TSDBs).

What exactly are Time Series Databases?

TSDBs are built specifically to store and query time-indexed data points, and they are optimized for that workload. They have a number of advantages over general-purpose databases.

- Efficient Data Storage: TSDBs use specific data structures and compression techniques to efficiently store time series data, reducing storage requirements.

- Optimized Querying: TSDBs provide query languages designed specifically for time series analysis, allowing users to retrieve and analyze data quickly based on time ranges, aggregations, and other criteria.

- Real-time Analytics: TSDBs are built to handle real-time data input and analysis, allowing businesses to monitor and respond to events as they happen.
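
To make the storage point concrete, here is a minimal sketch of delta encoding, one of the simpler techniques in this family. It is an illustration only: the function names and sample timestamps are made up for this example, and real TSDBs use their own, more elaborate schemes.

```python
from itertools import accumulate


def delta_encode(timestamps):
    """Store the first timestamp, then only the gap to each following one."""
    if not timestamps:
        return []
    deltas = [timestamps[0]]
    for previous, current in zip(timestamps, timestamps[1:]):
        deltas.append(current - previous)
    return deltas


def delta_decode(deltas):
    """Rebuild the original timestamps with a running sum."""
    return list(accumulate(deltas))


# Hypothetical sensor readings taken every 10 seconds (Unix epoch seconds).
readings = [1701241620, 1701241630, 1701241640, 1701241650]
encoded = delta_encode(readings)
print(encoded)  # [1701241620, 10, 10, 10]
```

Because regularly sampled data produces long runs of small, repeated gaps, the encoded form compresses far better than the raw timestamps.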

Popular Time Series Databases

Several popular TSDBs have evolved, each with its own distinct set of features and advantages. Here are some noteworthy examples.

InfluxDB

An open-source TSDB well known for its fast querying capabilities and support for massive amounts of time-stamped data.

# Sample InfluxDB Query

from influxdb import InfluxDBClient

client = InfluxDBClient(host='localhost', port=8086)

client.switch_database('mydatabase')

result = client.query("SELECT * FROM mymeasurement WHERE time > now() - 1d")

print(result)

Prometheus

A monitoring and alerting toolkit that excels in collecting and storing metrics using a multidimensional data model and a powerful query language (PromQL).

# Sample PromQL Query

http_requests_total{job="api-server", status="200"}

Elasticsearch

While primarily known as a full-text search and analytics engine, Elasticsearch also offers robust time series data capabilities, handling large-scale time series data with its distributed architecture.

# Sample Elasticsearch Query

{

"query": {

"range": {

"@timestamp": {

"gte": "now-1d/d",

"lt": "now/d"

}

}

}

}
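
The date math in that range can be hard to read at first. With `/d` rounding, `gte: now-1d/d` resolves to the start of yesterday and `lt: now/d` excludes everything from the start of today onward, so the query covers the whole of the previous day. The sketch below reproduces that window in plain Python; it assumes UTC (the default when no `time_zone` is given), and the function name is made up for this example.

```python
from datetime import datetime, timedelta, timezone


def yesterday_window(now):
    """Return the [start, end) window that the rounded range resolves to."""
    start_of_today = now.astimezone(timezone.utc).replace(
        hour=0, minute=0, second=0, microsecond=0
    )
    start_of_yesterday = start_of_today - timedelta(days=1)
    return start_of_yesterday, start_of_today


# A fixed, timezone-aware "now" so the result is reproducible.
now = datetime(2023, 11, 29, 7, 7, tzinfo=timezone.utc)
start, end = yesterday_window(now)
print(start.isoformat(), end.isoformat())
# 2023-11-28T00:00:00+00:00 2023-11-29T00:00:00+00:00
```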

OpenTSDB

OpenTSDB stores and retrieves time series data in HBase, which in turn typically runs on the Hadoop Distributed File System (HDFS). It is designed for scalability, making it suitable for large-scale deployments.

# Sample OpenTSDB Query

GET /api/query?start=1h-ago&m=avg:metric_name

Graphite

A lightweight TSDB that focuses on simplicity and ease of use. It is queried through a library of composable functions exposed by its render API, and it is well suited for small to medium-sized deployments.

# Sample Graphite Query

summarize(metric_name, "1h", "sum")
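
To show what a call like this computes, here is a rough Python equivalent that groups data points into one-hour buckets and sums each bucket. It is a simplified sketch rather than Graphite's implementation: the function name and sample points are made up, and buckets are simply aligned to multiples of the interval.

```python
from collections import defaultdict


def summarize_sum(points, interval_seconds=3600):
    """Group (timestamp, value) pairs into fixed buckets and sum each bucket."""
    buckets = defaultdict(float)
    for timestamp, value in points:
        # Align each point to the start of the bucket it falls into.
        bucket_start = timestamp - (timestamp % interval_seconds)
        buckets[bucket_start] += value
    return sorted(buckets.items())


# Hypothetical data points: (Unix epoch seconds, value).
points = [(7200, 1.0), (7260, 2.0), (10800, 4.0), (10860, 0.5)]
print(summarize_sum(points))  # [(7200, 3.0), (10800, 4.5)]
```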

Use Cases of Time Series Databases

TSDBs find applications across a wide range of industries and use cases.

- Financial Analytics: Analyzing historical market data, tracking transactions, and predicting trends are essential for financial institutions. TSDBs enable real-time monitoring of stock prices, currency exchange rates, and other financial metrics.

- IoT Data Management: With the proliferation of IoT devices, TSDBs are instrumental in handling the vast amount of data generated by sensors and devices. These databases enable organizations to monitor and analyze data from IoT devices in real-time, leading to informed decision-making.

- Infrastructure Monitoring: TSDBs find extensive use in monitoring and managing the performance of IT infrastructure. They help organizations track metrics related to server health, network latency, and application response times, facilitating proactive issue detection and resolution.

- Healthcare Systems: In healthcare, time series databases are employed to store and analyze patient data, monitor vital signs, and track the efficacy of treatments over time. These databases contribute to improved patient care and the advancement of medical research.

Conclusion

Time series databases have become indispensable tools in the modern data landscape, offering specialized solutions for handling the unique challenges posed by time-stamped data. From monitoring and analytics to financial modeling and IoT applications, the use cases for TSDBs continue to expand as the volume of time series data generated across industries continues to grow.

Each database mentioned here brings its own strengths to the table, catering to diverse needs in terms of scalability, performance, and ease of use. As organizations strive to harness the power of their time-series data, TSDBs will play an increasingly crucial role in enabling data-driven decision-making and unlocking new insights.

HostForLIFEASP.NET SQL Server 2022 Hosting

November 14, 2023 06:59 by

Peter

If you've ever seen the error message

"The query has been canceled because the estimated cost of this query (X) exceeds the configured threshold of Y. Contact the system administrator."

while running a query in SQL Server, you may be wondering what it means and how to fix it. In this article, I'll look at the cause of the error and how to resolve it.

What is the query governor's cost limit?

The query governor cost limit is a SQL Server setting that specifies the maximum estimated cost a query may have and still be allowed to run. The cost is an abstract figure produced by the query optimizer. It historically corresponded to the estimated elapsed time, in seconds, on a specific reference hardware configuration, and it is not a measurement of actual run time. This setting is intended to prevent long-running queries from consuming too many resources and interfering with other queries and applications.

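The query governor cost limit is 0 by default, which turns the query governor off so that every query is allowed to run. If the limit is set to a positive value such as 3000, any query whose estimated cost exceeds 3000 is canceled before it starts. The server-wide value is an advanced option that the system administrator can change using the sp_configure stored procedure.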
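
The decision rule itself is simple, and the following sketch mimics it in Python purely for illustration. The function name is made up, and the real check happens inside SQL Server before the query starts: a limit of 0 means no limit, and any other value rejects queries whose estimated cost exceeds it.

```python
def is_query_allowed(estimated_cost, cost_limit):
    """Mimic the governor's decision: a limit of 0 means no limit at all."""
    if cost_limit == 0:
        return True
    return estimated_cost <= cost_limit


print(is_query_allowed(14000, 0))      # True
print(is_query_allowed(14000, 3000))   # False
print(is_query_allowed(14000, 15000))  # True
```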

Why am I getting the error?

You receive this error when your query is complex or inefficient enough that its estimated cost exceeds the configured limit. The number and size of the tables involved, the indexes and statistics available, the join and aggregation techniques used, the filters and sort operations applied, and the SQL Server version and edition all affect how much a query is estimated to cost.

Running a query that scans a large table with no indexes or filters, for example, could result in a high estimated cost. Similarly, a query that calls a function or subquery for each row in the result set could have an extremely high estimated cost.

How do I fix the error?

The two most likely remedies are to increase the query governor's cost limit or to optimize your query.

- Increase the query governor's cost limit: To raise the limit for the current connection, use the SET QUERY_GOVERNOR_COST_LIMIT statement. For example, if the estimated cost of the query is 14,000, you can execute the following line before running the query:

SET QUERY_GOVERNOR_COST_LIMIT 15000

Here is an example of how to use it from C#:

using Microsoft.Data.SqlClient;

using System.Data;

var connectionString = "Server=YourServerName;Database=YourDatabaseName;User=YourUserName;Password=YourPassword;";

// Create a SqlConnection and SqlCommand

using SqlConnection connection = new SqlConnection(connectionString);

connection.Open();

// Set the QUERY_GOVERNOR_COST_LIMIT before executing the query

var setQueryGovernorCostLimit = "SET QUERY_GOVERNOR_COST_LIMIT 15000;";

using (SqlCommand setQueryGovernorCommand = new SqlCommand(setQueryGovernorCostLimit, connection))

{

setQueryGovernorCommand.ExecuteNonQuery();

}

// Complex SELECT query

var selectQuery = @"

SELECT

Orders.OrderID,

Customers.CustomerName,

Cars.CarModel,

Colors.ColorName,

Engines.EngineType,

Wheels.WheelType,

Interiors.InteriorType,

Accessories.AccessoryName,

Features.FeatureName,

Options.OptionName

-- Add more fields related to the customized automobiles here

FROM

Orders

INNER JOIN Customers ON Orders.CustomerID = Customers.CustomerID

INNER JOIN Cars ON Orders.CarID = Cars.CarID

INNER JOIN Colors ON Orders.ColorID = Colors.ColorID

INNER JOIN Engines ON Orders.EngineID = Engines.EngineID

INNER JOIN Wheels ON Orders.WheelID = Wheels.WheelID

INNER JOIN Interiors ON Orders.InteriorID = Interiors.InteriorID

LEFT JOIN OrderAccessories ON Orders.OrderID = OrderAccessories.OrderID

LEFT JOIN Accessories ON OrderAccessories.AccessoryID = Accessories.AccessoryID

LEFT JOIN CarFeatures ON Cars.CarID = CarFeatures.CarID

LEFT JOIN Features ON CarFeatures.FeatureID = Features.FeatureID

LEFT JOIN CarOptions ON Cars.CarID = CarOptions.CarID

LEFT JOIN Options ON CarOptions.OptionID = Options.OptionID

WHERE

1 = 1 -- Placeholder: replace with your filter conditions for customizations or specific orders

";

using SqlCommand command = new SqlCommand(selectQuery, connection);

using SqlDataAdapter adapter = new SqlDataAdapter(command);

DataTable dataTable = new DataTable();

adapter.Fill(dataTable);

This prevents the query from being canceled. However, this method is not advised, because allowing an expensive query to run can degrade the performance of other queries and applications. In addition, the statement must be executed on every connection that needs to run the query.

- Optimize your query: Reducing the estimated cost of your query through optimization is the preferable course of action. The following methods can help you make your queries more efficient.

- Use indexes: By reducing the amount of scanning and sorting necessary, indexes can help SQL Server locate the data more quickly. The columns used in your query's join, filter, and order by clauses can all have indexes created on them. The Database Engine Tuning Advisor can also recommend the best indexes for your query.

- Use statistics: Statistics let SQL Server estimate the number of rows and the distribution of values in your tables, which helps it choose the best execution plan for the query. The UPDATE STATISTICS statement and the sp_updatestats stored procedure can be used to update the statistics on your tables.

- Simplify your query: You can make your query simpler by eliminating any unnecessary tables, joins, filters, columns, or sorting. You can also rewrite it to use more efficient operators, such as EXISTS rather than IN, or divide it into smaller queries using temporary tables or common table expressions (CTEs).

- Use the latest SQL Server version and edition: Newer versions and editions may offer better performance and optimization features than earlier ones. For instance, the estimated cost of your query on SQL Server 2022 Web Edition may be lower than on SQL Server 2019 Web Edition. The @@VERSION function lets you check which SQL Server version and edition you are running.
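
The effect of an index on a query plan can be demonstrated with a small, self-contained sketch. Note the assumptions: it uses SQLite through Python's built-in sqlite3 module as a stand-in, because it runs anywhere without a server. SQL Server's optimizer, plan format, and cost figures are different, and the table, column, and index names are made up for this example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER)")
conn.executemany(
    "INSERT INTO orders (customer_id) VALUES (?)",
    [(i % 100,) for i in range(1000)],
)

query = "SELECT order_id FROM orders WHERE customer_id = 42"


def plan_details(connection, sql):
    """Return the human-readable detail column of SQLite's query plan."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return [row[3] for row in rows]


# Without an index, the filter forces a scan of the whole table.
plan_before = plan_details(conn, query)

# With an index on the filter column, the engine can seek directly.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = plan_details(conn, query)

print(plan_before)  # a full scan of the orders table
print(plan_after)   # a search that uses idx_orders_customer
```

In SQL Server, the equivalent check is to compare the estimated execution plan and its estimated subtree cost before and after creating the index.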

The query governor cost limit error occurs in SQL Server when the estimated cost of your query exceeds the configured threshold. Increasing the query governor cost limit or optimizing the query are two solutions to this problem. Query optimization is the better option, because it improves query performance and efficiency while avoiding potential resource issues.


November 1, 2023 08:28 by

Peter

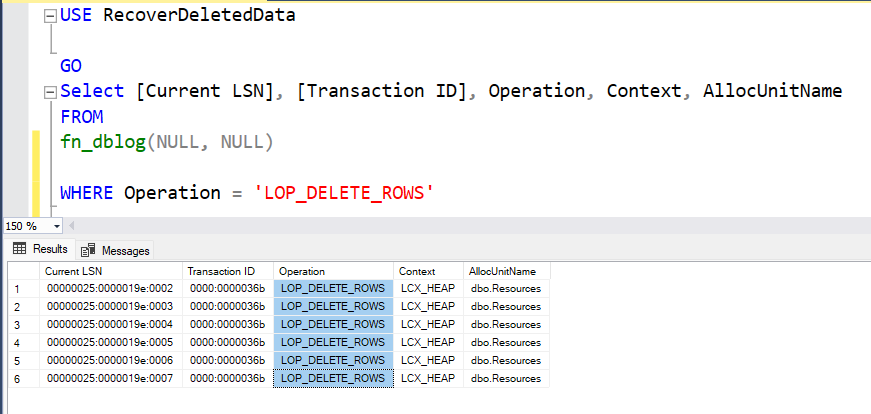

In this article, we'll take a closer look at the log sequence number (LSN), the fn_dblog() function used to read the transaction log, and the recovery models that go with it.

SQL Server Transaction Log

Every SQL Server database has a transaction log that records all transactions as well as the database changes made by each transaction. The transaction log is a crucial component of the database, and if there is a system failure, the transaction log may be necessary to restore consistency to your database.

The SQL Server Transaction Log is useful for recovering deleted or updated data if you run a DELETE or UPDATE operation with the wrong condition or without a filter. This is done by reading the records in the SQL Server Transaction Log file. DML operations such as INSERT, UPDATE, and DELETE statements, as well as DDL operations such as CREATE and DROP statements, are among the events written to the SQL Server Transaction Log file without any additional configuration by the database administrator.

Retrieving Log Records by LSN from the Transaction Log

The fn_dblog() Function

The fn_dblog() function is one of SQL Server's undocumented functions. It lets you view the transaction log records in the active portion of the transaction log file.

fn_dblog() Arguments

The fn_dblog() function takes two arguments.

- The first is the starting log sequence number (LSN). You can also pass NULL to return everything from the beginning of the log.

- The second is the ending LSN. You can also pass NULL to return everything through the end of the log.
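
Because fn_dblog() is undocumented, the following is based on commonly reported behavior rather than an official specification. LSNs in the function's output are usually shown as three hexadecimal parts separated by colons, while the arguments are typically passed with those parts converted to decimal. The helper below is a sketch of that conversion; the function name and the sample LSN are made up for this example.

```python
def lsn_hex_to_decimal(lsn):
    """Convert the three colon-separated parts of an LSN from hex to decimal."""
    parts = lsn.split(":")
    if len(parts) != 3:
        raise ValueError("expected three colon-separated parts, got %r" % lsn)
    return ":".join(str(int(part, 16)) for part in parts)


# Hypothetical LSN as it might appear in the function's output.
print(lsn_hex_to_decimal("00000014:00000061:0001"))  # 20:97:1
```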

fn_dblog()

SELECT * FROM fn_dblog (

NULL, -- Start LSN nvarchar(25)

NULL -- End LSN nvarchar(25)

)

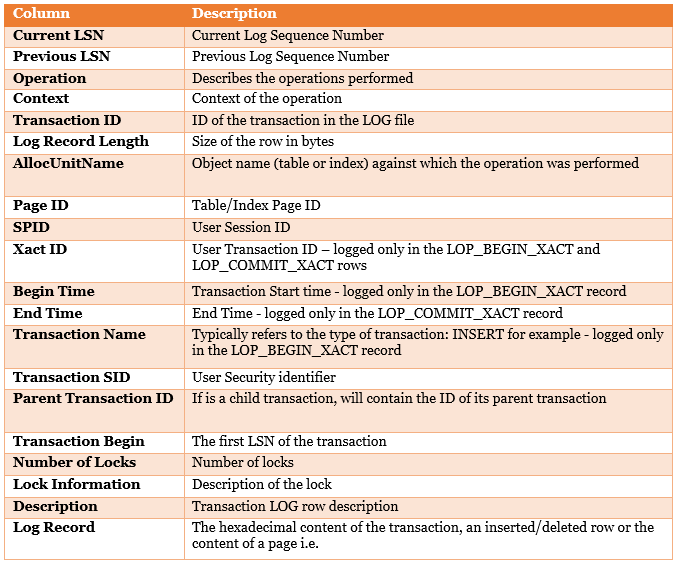

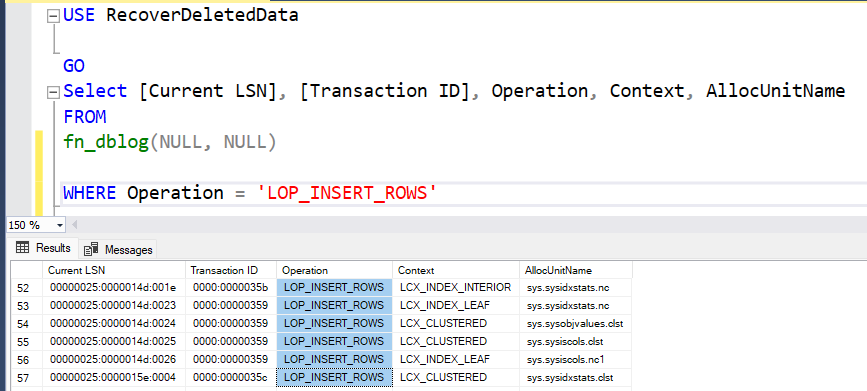

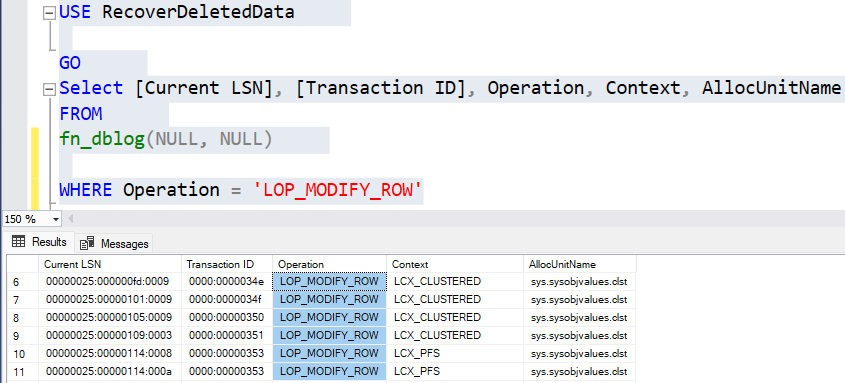

We will not discuss the details of the function but list the possibly useful columns below:

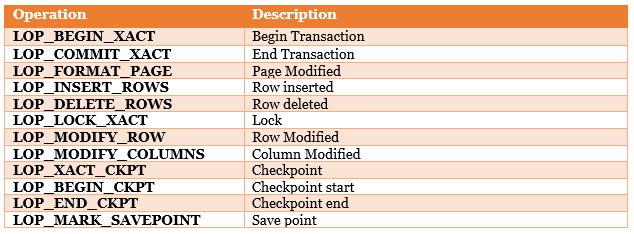

Operation Column in fn_dblog()

The ‘Operation’ column indicates the type of operation that has been performed and logged in the transaction log file.

For example:

LOP_INSERT_ROWS

LOP_MODIFY_ROW

LOP_DELETE_ROWS

LOP_BEGIN_XACT

Recovery Models

SQL Server backup and restore operations occur within the context of the recovery model of the database. Recovery models are designed to control transaction log maintenance. A recovery model is a database property that controls how transactions are logged, whether the transaction log requires (and allows) backing up, and what kinds of restore operations are available.

Three recovery models exist:

- Simple: operations that require transaction log backups are not supported by the simple recovery model.

- Full

- Bulk-logged

Typically, a database uses the full recovery model or the simple recovery model. A database can be switched to another recovery model at any time. When a new database is created in Microsoft SQL Server, it inherits the recovery model of the model database, which is full by default in most editions.

Recovery Option

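Restoring a database with the RECOVERY option is the default, and it is all you need when restoring from a single full backup. However, if you have to apply several backups in sequence (a full backup followed by differential or transaction log backups), restore every backup except the last one with the NORECOVERY option, so that the database stays in the restoring state and can accept the next backup.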
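
The ordering rule can be sketched as a small Python helper that generates the RESTORE statements for a backup chain. This is an illustration under stated assumptions: the function name, database name, and file paths are hypothetical, and the statements are built by plain string formatting, so it should only be fed trusted values.

```python
def build_restore_sequence(database, full_backup, other_backups):
    """Build RESTORE statements; only the last one brings the database online.

    other_backups is an ordered list of (kind, path) pairs, where kind is
    "DATABASE" for a differential backup or "LOG" for a transaction log backup.
    """
    steps = [("DATABASE", full_backup)] + list(other_backups)
    statements = []
    for index, (kind, path) in enumerate(steps):
        is_last = index == len(steps) - 1
        option = "RECOVERY" if is_last else "NORECOVERY"
        statements.append(
            f"RESTORE {kind} [{database}] FROM DISK = N'{path}' WITH {option};"
        )
    return statements


# Hypothetical database name and backup file paths.
for statement in build_restore_sequence(
    "SalesDb",
    r"C:\Backups\SalesDb_full.bak",
    [
        ("DATABASE", r"C:\Backups\SalesDb_diff.bak"),
        ("LOG", r"C:\Backups\SalesDb_log1.trn"),
    ],
):
    print(statement)
```

Every statement except the final one ends in WITH NORECOVERY, which keeps the database in the restoring state until the whole chain has been applied.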

You can even recover a database without restoring any further data:

RESTORE DATABASE *database_name* WITH RECOVERY
